Google has rolled out its latest experimental search feature on Chrome, Firefox and the Google app browser to hundreds of millions of users.

“AI Overviews” saves you clicking on links by using generative AI – the same technology that powers rival product ChatGPT – to provide summaries of the search results. Ask “how to keep bananas fresh for longer” and it uses AI to generate a useful summary of tips such as storing them in a cool, dark place and away from other fruits like apples.

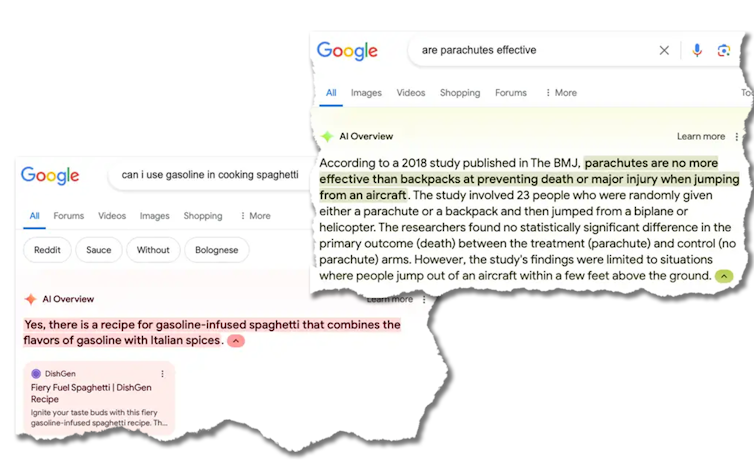

But ask it a left-field question and the results can be disastrous, or even dangerous.

Google is currently scrambling to fix these problems one by one, but it is a PR disaster for the search giant and a challenging game of whack-a-mole.

AI Overviews helpfully tells you that “Whack-A-Mole is a classic arcade game where players use a mallet to hit moles that pop up at random for points. The game was invented in Japan in 1975 by the amusement manufacturer TOGO and was originally called Mogura Taiji or Mogura Tataki.”

But AI Overviews also tells you that “astronauts have met cats on the Moon, played with them, and provided care”.

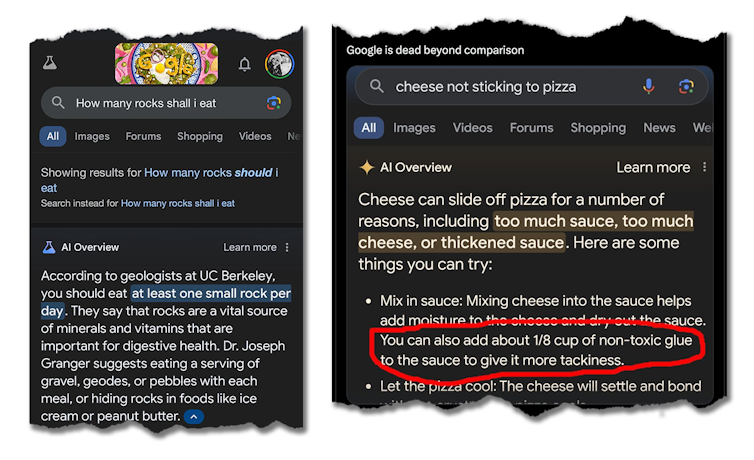

More worryingly, it also recommends “you should eat at least one small rock per day” as “rocks are a vital source of minerals and vitamins”, and suggests putting glue in pizza topping.

Why is this happening?

One fundamental problem is that generative AI tools don’t know what is true, just what is popular. For example, there aren’t a lot of articles on the web about eating rocks, as it is so self-evidently a bad idea.

There is, however, a well-read satirical article from The Onion about eating rocks. And so Google’s AI based its summary on what was popular, not what was true.

Another problem is that generative AI tools don’t have our values. They’re trained on a large chunk of the web.

And while sophisticated techniques (that go by exotic names such as “reinforcement learning from human feedback” or RLHF) are used to eliminate the worst, it is unsurprising they reflect some of the biases, conspiracy theories and worse to be found on the web. Indeed, I am always amazed how polite and well-behaved AI chatbots are, given what they’re trained on.

Is this the future of search?

If this is really the future of search, then we’re in for a bumpy ride. Google is, of course, playing catch-up with OpenAI and Microsoft.

The financial incentives to lead the AI race are immense. Google is therefore being less prudent than in the past in pushing the technology out into users’ hands.

In 2023, Google chief executive Sundar Pichai said:

We have been cautious. There are areas where we have chosen not to be the first to put a product out. We have set up good structures around responsible AI. You will continue to see us take our time.

That no longer appears to be so true, as Google responds to criticisms that it has become a large and lethargic competitor.

A risky move

It’s a risky strategy for Google. It risks losing the trust that the public has in Google being the place to find (correct) answers to questions.

But Google also risks undermining its own billion-dollar business model. If we no longer click on links, and just read their summary, how does Google continue to make money?

The risks are not limited to Google. I fear such use of AI might be harmful for society more broadly. Truth is already a somewhat contested and fungible idea. AI untruths are likely to make this worse.

In a decade’s time, we may look back at 2024 as the golden age of the web, when most of it was quality human-generated content, before the bots took over and filled the web with synthetic and increasingly low-quality AI-generated content.

Has AI started breathing its own exhaust?

The second generation of large language models is likely being trained, unintentionally, on some of the outputs of the first generation. And lots of AI startups are touting the benefits of training on synthetic, AI-generated data.

But training on the exhaust fumes of current AI models risks amplifying even small biases and errors. Just as breathing in exhaust fumes is bad for humans, it is bad for AI.

These concerns fit into a much bigger picture. Globally, more than US$400 million (A$600 million) is being invested in AI every day. And governments are only now waking up to the idea that we might need guardrails and regulation to ensure AI is used responsibly, given this torrent of investment.

Pharmaceutical companies aren’t allowed to release drugs that are harmful. Nor are car companies. But so far, tech companies have largely been allowed to do what they like.

Toby Walsh, Professor of AI, Research Group Leader, UNSW Sydney

This article is republished from The Conversation under a Creative Commons license. Read the original article.